Artificial Intelligence (AI) has witnessed remarkable progress since its inception in the 1950s, with the evolution of machine learning playing a crucial role in propelling its growth. The dynamic landscape of AI has ushered in significant transformations, giving rise to increasingly sophisticated AI models that emulate human-like capabilities. One standout model garnering recent attention is OpenAI’s ChatGPT, a language-based AI that has made a substantial impact in the AI community. This blog post explores the technology powering ChatGPT, offering a comprehensive examination of its fundamental concepts.

ChatGPT: How OpenAI’s AI Language Model Creates Human-Like Text

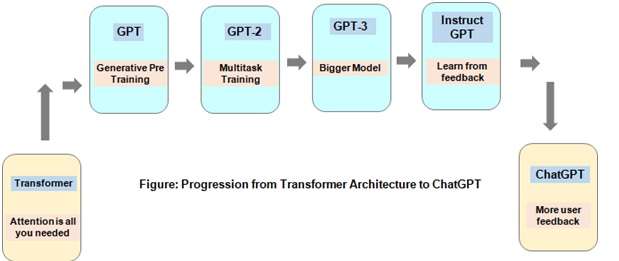

ChatGPT, created by OpenAI, is an AI language model that uses deep learning to produce human-like text. It relies on the transformer architecture, a successful neural network design widely used in Natural Language Processing (NLP) tasks. Through extensive training on a vast dataset of text, ChatGPT is designed to generate language that is coherent, contextually appropriate, and natural-sounding.

How ChatGPT Works

ChatGPT uses three important technologies to understand and generate text: Natural Language Processing (NLP), Machine Learning, and Deep Learning. These technologies help ChatGPT learn from data and create text that sounds as if a human wrote it.

Making Language Easier with Natural Language Processing (NLP)

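NLP is the field concerned with how computers understand and use human language, and it is what lets ChatGPT create text that makes sense and sounds natural. ChatGPT's first step is tokenization, which splits text into units the model can process. Classic NLP tasks such as named entity recognition, sentiment analysis, and part-of-speech tagging are not separate stages inside ChatGPT; instead, the model can perform them when asked, because it learned the underlying patterns of language during training.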

Learning from Data: Machine Learning in Action

Machine Learning is like teaching ChatGPT to predict words based on what it learned from lots of text data. The model learns patterns from previous words in sentences to guess what the next word should be.
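To make next-word prediction concrete, here is a toy sketch. It is only an analogy: it counts which word follows which in a tiny made-up corpus (a bigram model), whereas ChatGPT uses a neural network that considers many preceding tokens at once. The function names `train_bigram_counts` and `predict_next` are hypothetical, chosen for illustration.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def train_bigram_counts(corpus: List[str]) -> Dict[str, Counter]:
    """Count how often each word is immediately followed by each other word."""
    follows: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows


def predict_next(follows: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the word seen most often after `word`, or None if `word` was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


corpus = [
    "the cat sat on the mat",
    "the cat chased the mouse",
    "the dog sat on the rug",
]
model = train_bigram_counts(corpus)
print(predict_next(model, "the"))  # cat
print(predict_next(model, "sat"))  # on
```

The "patterns" this toy model learns are just pair counts; a neural model learns far richer patterns, but the goal of guessing the next word from earlier text is the same.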

Getting Smarter with Deep Learning

Deep Learning means training neural networks with many layers on large amounts of data; it is like training ChatGPT’s brain with a lot of information. ChatGPT’s transformer architecture is one such deep network, and this training is what lets it understand and create text that is not only clear but also flows naturally.

ChatGPT Architecture Overview

ChatGPT uses a special architecture called the transformer, which is like its brain. This idea came from the 2017 paper “Attention Is All You Need” by Vaswani et al. The transformer helps ChatGPT understand and work with sequences of data, like text. To bring this idea to life, ChatGPT uses PyTorch, a machine learning library.

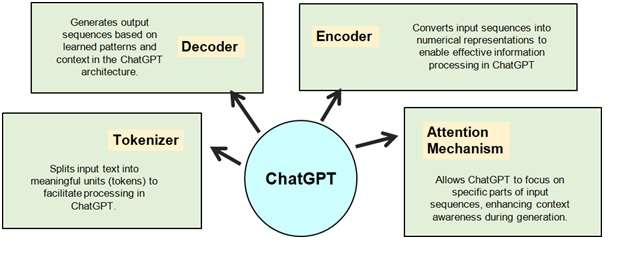

Breaking Down the Parts of ChatGPT

- The Starter Layer: Input Layer

This layer is like ChatGPT’s eyes. It takes in the text and turns it into numbers. This process, called tokenization, splits the text into smaller parts (tokens, like words) and gives each part a special number called a token ID.
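A minimal sketch of the input step, assuming a simple word-and-punctuation split and a vocabulary built on the fly from example sentences. ChatGPT's real tokenizer works on subwords with a fixed vocabulary learned in advance, so the IDs produced here are illustrative only; `tokenize`, `build_vocab`, and `encode` are hypothetical helpers.

```python
import re


def tokenize(text):
    """Split text into lowercase words and individual punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text.lower())


def build_vocab(texts):
    """Assign each distinct token an integer ID in order of first appearance."""
    vocab = {"<unk>": 0}  # ID 0 is reserved for tokens not seen while building the vocabulary
    for text in texts:
        for token in tokenize(text):
            if token not in vocab:
                vocab[token] = len(vocab)
    return vocab


def encode(text, vocab):
    """Turn text into a list of token IDs, mapping unseen tokens to <unk>."""
    return [vocab.get(tok, vocab["<unk>"]) for tok in tokenize(text)]


vocab = build_vocab(["Hello, world!", "Hello there."])
print(encode("Hello there, world!", vocab))  # [1, 5, 2, 3, 4]
print(encode("Goodbye world", vocab))        # [0, 3]
```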

- The Converter Layer: Embedding Layer

Now, each token gets transformed into a fancy set of numbers, sort of like a secret code. These special numbers, called embeddings, represent what the words mean.
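The embedding step is essentially a table lookup: each token ID selects one row of numbers. In the sketch below the table is tiny and filled with random values; in a real model the rows are learned during training and each row is much longer. The helper names `make_embedding_table` and `embed` are hypothetical.

```python
import random


def make_embedding_table(vocab_size, dim, seed=0):
    """Create one row of `dim` numbers per token ID (random here; learned in a real model)."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(vocab_size)]


def embed(token_ids, table):
    """Look up the embedding vector for each token ID by using it as a row index."""
    return [table[i] for i in token_ids]


table = make_embedding_table(vocab_size=7, dim=4)
vectors = embed([1, 5, 2], table)
print(len(vectors), len(vectors[0]))  # 3 4
```

Because the lookup is by ID, the same token always starts with the same vector; it is the later Transformer blocks that adjust each token's representation based on its context.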

- The Worker Layers: Transformer Blocks

Think of these as ChatGPT’s workers. They process the sequence of tokens to understand the text. Each worker (Transformer block) has two main tools: a Multi-Head Attention mechanism and a Feed-Forward neural network.

- Multi-Head Attention Mechanism: The Attention Checker

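This tool helps ChatGPT focus on the important words in the text. For each word, it compares that word with the others and gives each one a score for how relevant it is, then uses those scores to decide how much information to take from each. "Multi-head" means several of these comparisons run in parallel, each free to focus on a different kind of relationship between words.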
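The scoring described above is usually implemented as scaled dot-product attention. The sketch below shows a single attention head in plain Python, assuming the query, key, and value vectors are passed in directly; a real transformer first computes them from the embeddings with learned weight matrices, and multi-head attention runs several such heads in parallel and combines their outputs. The `causal` flag reflects the GPT-style rule that each token may only look at itself and earlier tokens.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values, causal=True):
    """Single-head scaled dot-product attention over lists of vectors.

    For each position i, score each visible position j by dot(q_i, k_j) / sqrt(d),
    convert the scores to weights with softmax, and return the weighted sum of the
    value vectors. With causal=True, position i only sees positions j <= i.
    """
    d = len(queries[0])
    outputs = []
    for i, q in enumerate(queries):
        visible = range(i + 1) if causal else range(len(keys))
        scores = [sum(a * b for a, b in zip(q, keys[j])) / math.sqrt(d) for j in visible]
        weights = softmax(scores)
        out = [0.0] * len(values[0])
        for w, j in zip(weights, visible):
            for k, v in enumerate(values[j]):
                out[k] += w * v
        outputs.append(out)
    return outputs


x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out = attention(x, x, x)
print(out[0])  # [1.0, 0.0]: the first token can only attend to itself
```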

- Feed-Forward Neural Network: The Decision Maker

This network helps ChatGPT make sense of the information. It transforms the output of the attention step for each token, refining it into a richer representation that the next Transformer block builds on.
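A minimal sketch of the feed-forward step: two linear layers with a nonlinearity between them, applied to each token's vector separately. The weights below are hand-picked toy values, and ReLU is used for simplicity (the original transformer used ReLU; GPT-style models commonly use GELU). A real Transformer block also wraps both the attention and feed-forward steps in residual connections and layer normalization, which this sketch omits.

```python
def linear(x, weights, bias):
    """Compute weights @ x + bias for one vector x (weights is a list of rows)."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(x):
    """Replace negative entries with zero."""
    return [max(0.0, v) for v in x]


def feed_forward(x, w1, b1, w2, b2):
    """Expand to a wider hidden layer, apply the nonlinearity, then project back."""
    hidden = relu(linear(x, w1, b1))
    return linear(hidden, w2, b2)


# Toy sizes: model width 2, hidden width 3 (real models are far wider).
w1 = [[1.0, 0.0], [0.0, 1.0], [1.0, -1.0]]
b1 = [0.0, 0.0, 0.0]
w2 = [[1.0, 0.0, 1.0], [0.0, 1.0, 0.0]]
b2 = [0.0, 0.0]
print(feed_forward([2.0, 1.0], w1, b1, w2, b2))  # [3.0, 1.0]
```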

Putting It All Together: How ChatGPT Thinks

- ChatGPT uses many Transformer blocks in a row, letting it think in multiple rounds. The final step predicts what comes next in the text by looking at the whole sequence of words. It’s like ChatGPT saying, “Based on everything I’ve seen, here’s what I think should come next.”

- In simpler terms, ChatGPT reads, understands, and predicts by breaking down the text into smaller pieces, figuring out what’s important, and making smart decisions to create meaningful responses.
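The predict-then-append loop can be sketched as greedy decoding: at each step, score every possible next token, append the best one, and repeat until an end token or a length limit. Here `toy_scores` is a hypothetical stand-in for the full stack of Transformer blocks, and greedy selection is just one simple strategy, not necessarily how ChatGPT chooses its words; sampling with a temperature is a common alternative.

```python
from typing import Callable, List


def generate(prompt_ids: List[int],
             next_token_scores: Callable[[List[int]], List[float]],
             max_new_tokens: int,
             eos_id: int) -> List[int]:
    """Greedy autoregressive decoding: repeatedly append the highest-scoring next token."""
    ids = list(prompt_ids)
    for _ in range(max_new_tokens):
        scores = next_token_scores(ids)  # one score per vocabulary entry
        best = max(range(len(scores)), key=scores.__getitem__)
        ids.append(best)
        if best == eos_id:  # stop once the end-of-sequence token is produced
            break
    return ids


def toy_scores(ids):
    """Hypothetical model: always favors the token after the last one (mod 6)."""
    vocab_size = 6
    target = (ids[-1] + 1) % vocab_size
    return [1.0 if t == target else 0.0 for t in range(vocab_size)]


print(generate([0, 1], toy_scores, max_new_tokens=10, eos_id=5))  # [0, 1, 2, 3, 4, 5]
```

Each new token is fed back in as input for the next step, which is why the model "looks at the whole sequence" every time it predicts.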

Understanding Tokenization in ChatGPT

Tokenization is like breaking down a sentence into smaller parts, called tokens. In ChatGPT, tokens are usually words or smaller parts of words, and each token gets a special number called a token ID. This process is crucial because it turns words into numbers, making it easier for ChatGPT’s brain to understand and work with them.

Why Tokens Matter in ChatGPT

Token ID Magic in the Embedding Layer

The first step is giving each word or part a number. These numbers are like codes that ChatGPT understands. The model then uses these token IDs to create a special set of numbers, or embeddings, for each token. These embeddings capture what the words mean.

Tokens Guide the Transformer Blocks

The tokens and their embeddings guide ChatGPT’s understanding of the text. Think of tokens as puzzle pieces, and embeddings as the clues that help ChatGPT put the puzzle together. The model uses this information in the Transformer blocks to make smart predictions.

Choosing the Right Tokens for ChatGPT

The kind of tokens chosen and how they are split can really impact how well ChatGPT works.

There are two common methods:

- Word-Based Tokenization: Each token represents a whole word.

- Subword-Based Tokenization: Tokens represent smaller parts of words or even characters. This method, used in ChatGPT, is helpful for understanding rare or unusual words that might not fit well with word-based tokenization.
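To see why subword tokenization helps with rare words, the sketch below compares a word-based tokenizer against a greedy longest-match subword splitter, using small hand-made vocabularies. This is a simplification: ChatGPT's tokenizer is based on byte-pair encoding (BPE), which learns its vocabulary by repeatedly merging frequent character sequences, whereas this sketch simply picks the longest known piece at each position (similar in spirit to WordPiece). The helper names are hypothetical.

```python
def word_tokenize(text, vocab):
    """Word-based: each whole word is a token; unknown words collapse to <unk>."""
    return [w if w in vocab else "<unk>" for w in text.split()]


def subword_tokenize(word, vocab):
    """Greedy longest-match subword split.

    At each position, take the longest vocabulary entry that matches;
    fall back to a single character so every word can be represented.
    """
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        while end > start + 1 and word[start:end] not in vocab:
            end -= 1
        pieces.append(word[start:end])  # a single character if nothing longer matched
        start = end
    return pieces


word_vocab = {"the", "players", "were", "happy"}
subword_vocab = {"un", "happi", "happy", "ness", "play", "er", "s"}

print(word_tokenize("the players were unhappy", word_vocab))  # ['the', 'players', 'were', '<unk>']
print(subword_tokenize("unhappiness", subword_vocab))         # ['un', 'happi', 'ness']
```

The word-based tokenizer loses "unhappy" entirely, while the subword splitter can still represent a word it never saw whole by combining familiar pieces.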

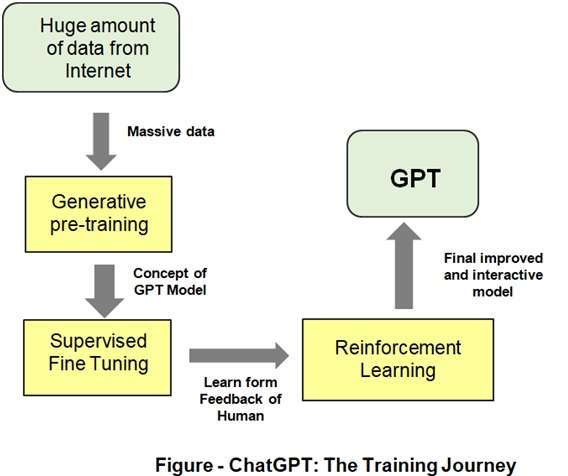

How ChatGPT Learns: The Training Journey

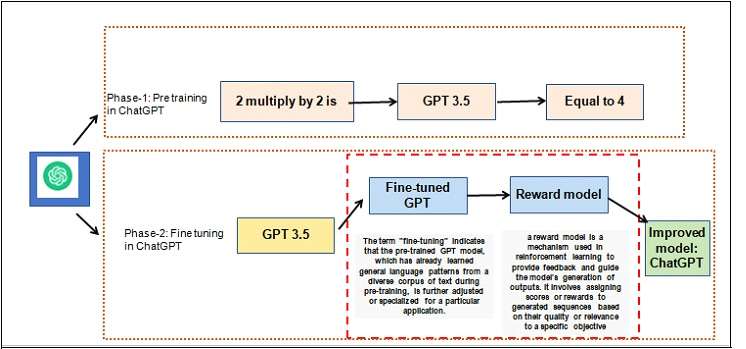

ChatGPT’s training is a complicated process with two main steps: pre-training and fine-tuning. The goal is to help the model get really good at generating the right kind of text.

Step 1: Getting the Basics Right – Pre-training

In the first phase, called pre-training, ChatGPT learns about language and context by studying a bunch of text. Imagine it’s like teaching ChatGPT to predict the next word in a sentence. This phase uses a big chunk of text data to help the model understand how words fit together. The special codes called token embeddings are learned during this phase.
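The next-word objective of pre-training is usually measured with cross-entropy loss: the negative log of the probability the model gave to the token that actually came next, averaged over positions. The sketch below computes it for made-up probability distributions over a three-token vocabulary; training adjusts the model's weights to push this number down. The function name `next_token_loss` is hypothetical.

```python
import math


def next_token_loss(predicted_probs, target_ids):
    """Average cross-entropy for next-token prediction.

    predicted_probs[t] is the model's probability distribution over the vocabulary
    at step t; target_ids[t] is the token that actually came next. The loss is the
    mean of -log(probability assigned to the correct token), so lower is better.
    """
    losses = [-math.log(probs[target]) for probs, target in zip(predicted_probs, target_ids)]
    return sum(losses) / len(losses)


# Vocabulary of 3 tokens, two prediction steps (made-up probabilities).
confident = [[0.9, 0.05, 0.05], [0.1, 0.8, 0.1]]
unsure = [[0.4, 0.3, 0.3], [0.3, 0.4, 0.3]]
targets = [0, 1]
print(round(next_token_loss(confident, targets), 3))  # 0.164
print(round(next_token_loss(unsure, targets), 3))     # 0.916
```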

Step 2: Specializing for the Job – Fine-tuning

After pre-training, ChatGPT goes through fine-tuning. This is like giving it extra training on a specific task, like answering questions or creating text. The idea is to adjust the model for the particular job and fine-tune its settings so it produces the right kind of results.
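Fine-tuning reuses the weights learned in pre-training as a starting point and keeps training on task-specific data. The drastically simplified sketch below uses a model with a single weight instead of a neural network's millions or billions: it is first trained on "general" data, then fine-tuned on a small "task" dataset. The data and the `train` helper are hypothetical, chosen only to show the idea of continuing from pre-trained weights.

```python
def train(w, data, lr, steps):
    """Gradient descent on mean squared error for the one-weight model y = w * x."""
    for _ in range(steps):
        # d/dw of mean((w*x - y)^2) is mean(2 * (w*x - y) * x)
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


general_data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]  # broad pattern: y = 2x
task_data = [(1.0, 2.5), (2.0, 5.0)]                 # specific task: y = 2.5x

w_pretrained = train(0.0, general_data, lr=0.05, steps=200)          # start from scratch
w_finetuned = train(w_pretrained, task_data, lr=0.05, steps=50)      # start from pre-trained weight
print(round(w_pretrained, 3), round(w_finetuned, 3))  # 2.0 2.5
```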

Keys to Success in Fine-tuning: Choosing the Right Start and Settings

1. Picking the Right Starting Point – The Prompt

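ChatGPT needs the right information to get started, called a prompt. During fine-tuning, the model is trained on example prompts paired with good responses; once it is in use, the prompt sets the stage for what ChatGPT is supposed to do. Choosing the right prompt is super important to guide the model in the right direction.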

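2. Fine-Tuning Settings – The Details Matter

During fine-tuning, it’s crucial to use the correct settings, such as the learning rate, which controls how much the model’s weights change at each training step. Other settings matter when the model generates text: temperature, for example, controls how random or focused the model’s responses are and plays a big role in the results.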
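Temperature works by dividing the model's raw scores (logits) before they are turned into probabilities with softmax. A minimal sketch, assuming made-up logits for a three-token vocabulary; `temperature_probs` and `sample_with_temperature` are hypothetical helper names.

```python
import math
import random


def temperature_probs(logits, temperature):
    """Softmax of logits / temperature.

    A temperature below 1 sharpens the distribution (more focused output);
    above 1 flattens it (more random output).
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - m) for s in scaled]
    total = sum(weights)
    return [w / total for w in weights]


def sample_with_temperature(logits, temperature, rng):
    """Draw one token index according to the temperature-scaled probabilities."""
    probs = temperature_probs(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


rng = random.Random(42)
logits = [2.0, 1.0, 0.1]
for t in (0.2, 1.0, 2.0):
    picks = [sample_with_temperature(logits, t, rng) for _ in range(1000)]
    print(t, picks.count(0) / 1000)  # share of samples that chose the top-scoring token
```

At a low temperature nearly all samples pick the top-scoring token; at a high temperature the choices spread out across the vocabulary.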

After Training: ChatGPT in Action

Once all the training is done, ChatGPT is ready to be used for different tasks. Its training allows it to generate high-quality outputs, making it really useful for tasks like talking to users or helping with writing.

What’s Next: Meet GPT-4

OpenAI is gearing up to release GPT-4, the latest and most advanced version in the GPT family. GPT-4 is expected to be more capable than its predecessors, making it even better at handling complex tasks like writing long articles or creating music with higher accuracy. Exciting things are on the horizon!

Conclusion: Embracing the Future of AI

As we witness the strides made by models like ChatGPT and anticipate the arrival of GPT-4, it’s evident that AI is entering an era of unparalleled sophistication. The fusion of Natural Language Processing, Machine Learning, and Deep Learning has propelled these models to mimic human-like capabilities, revolutionizing how we interact with technology. The journey from understanding tokenization to fine-tuning models marks a significant leap in AI’s capabilities, promising even more remarkable applications in the future. With GPT-4 on the horizon, the realm of possibilities for AI continues to expand, opening doors to tasks previously deemed complex. The narrative of AI’s evolution is an exciting one, and the story is far from over.